SearchGPT, Perplexity, Google, and the Future of Search

November 1, 2024


Surprise, surprise: OpenAI just announced ChatGPT Search, its competitor to Google, Bing, Perplexity, and the dozens of other search engines out there. This article dives into the future of search: how it will evolve, how different engines will “answer” differently, and how to adapt your SEO strategy to this shift in search experiences.

Actually, let’s be honest: this is not really a “surprise”. We knew this was coming. Perplexity and You.com have been around for a while, Google launched AI Overviews several months back, Bing incorporated AI answers well before that without getting much recognition, and the list goes on.

In fact, we at Passionfruit predicted and prepared for this several months back in “The Impact of AI on Search Engine Algorithms & Optimization.”

The Need for New Search Experiences

Before we dive into the details, let’s first understand why so many search competitors are coming up.

Don’t get me wrong: I really did like Google’s search experience, and Google has done a phenomenal job of staying #1 for more than two decades. It’s still the leader in the space, capturing more than 80% of search journeys globally, and I believe it will take at least 2-3 years for the new kids on the block to dethrone it from the top spot. Google got the job done: it gave me resources I could refer to while shopping for products or learning something new.

However, over the last couple of years, low-quality pages built specifically to rank on Google became so widespread that a good chunk of the “10 blue links” became useless. I could still find what I was looking for by googling it, but it took a lot more time and effort to find the answer.

That’s where these alternative search experiences come in. They’re all built on top of large language models that aim to understand each website and, ideally, sift through the noise. The result? An answer with sources, as opposed to a list of sources (the 10 blue links).

Personally, much of my day-to-day searching around debugging or learning specific coding libraries now starts on Perplexity instead of Google → Stack Overflow, because LLMs can answer navigational and informational queries by referring to the actual website. Setting aside the legal questions (e.g. Perplexity being sued by publishers over its use of their content), the end-user experience is really smooth: I get the answer I’m looking for in one query, instead of opening 4-5 different links and hunting for it.

However, these alternative search engines still have a long way to go when it comes to purchase decisions. The best example is shopping for a physical product: Google Shopping, with its “AI powered” product recommendations, is currently way ahead of OpenAI, Perplexity, and the like.

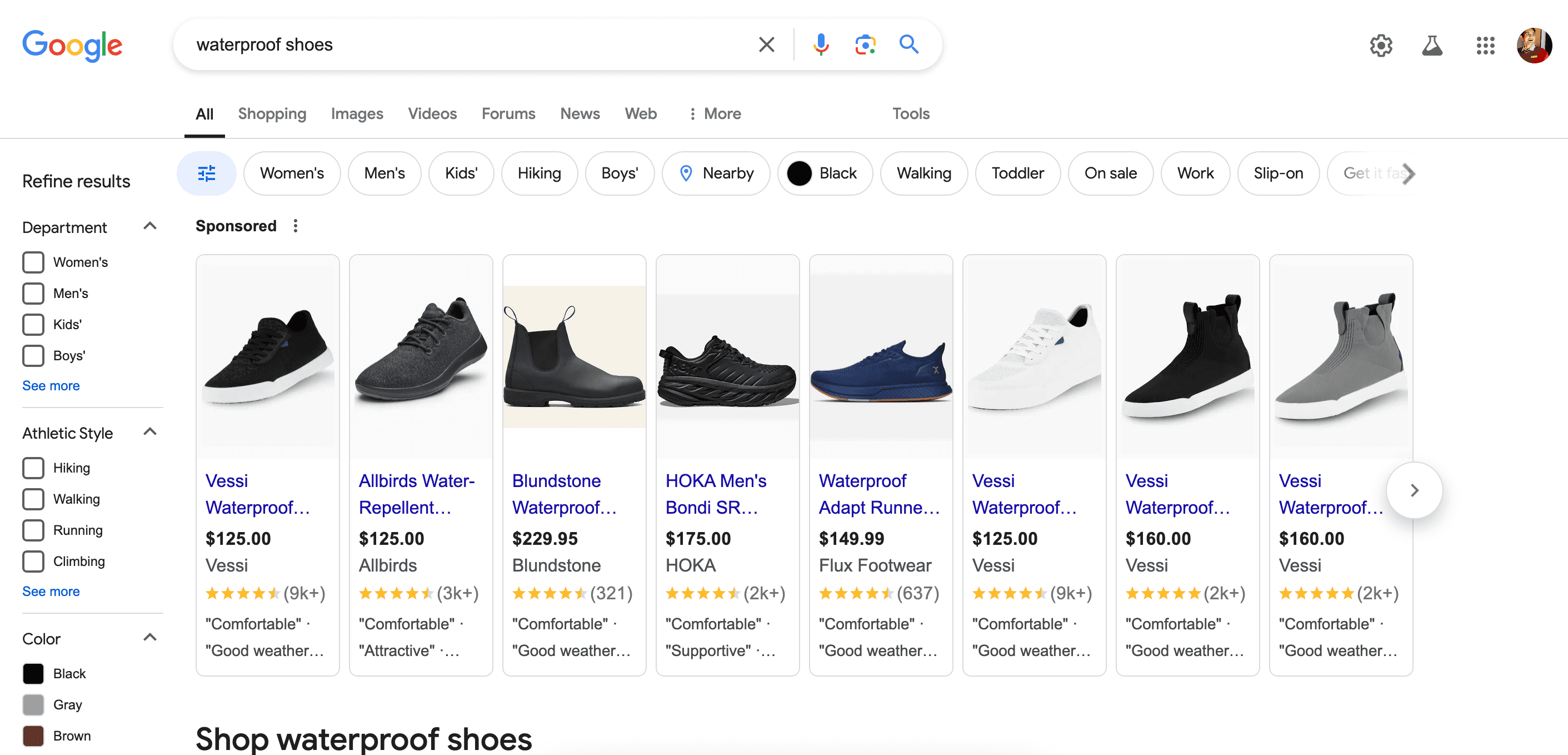

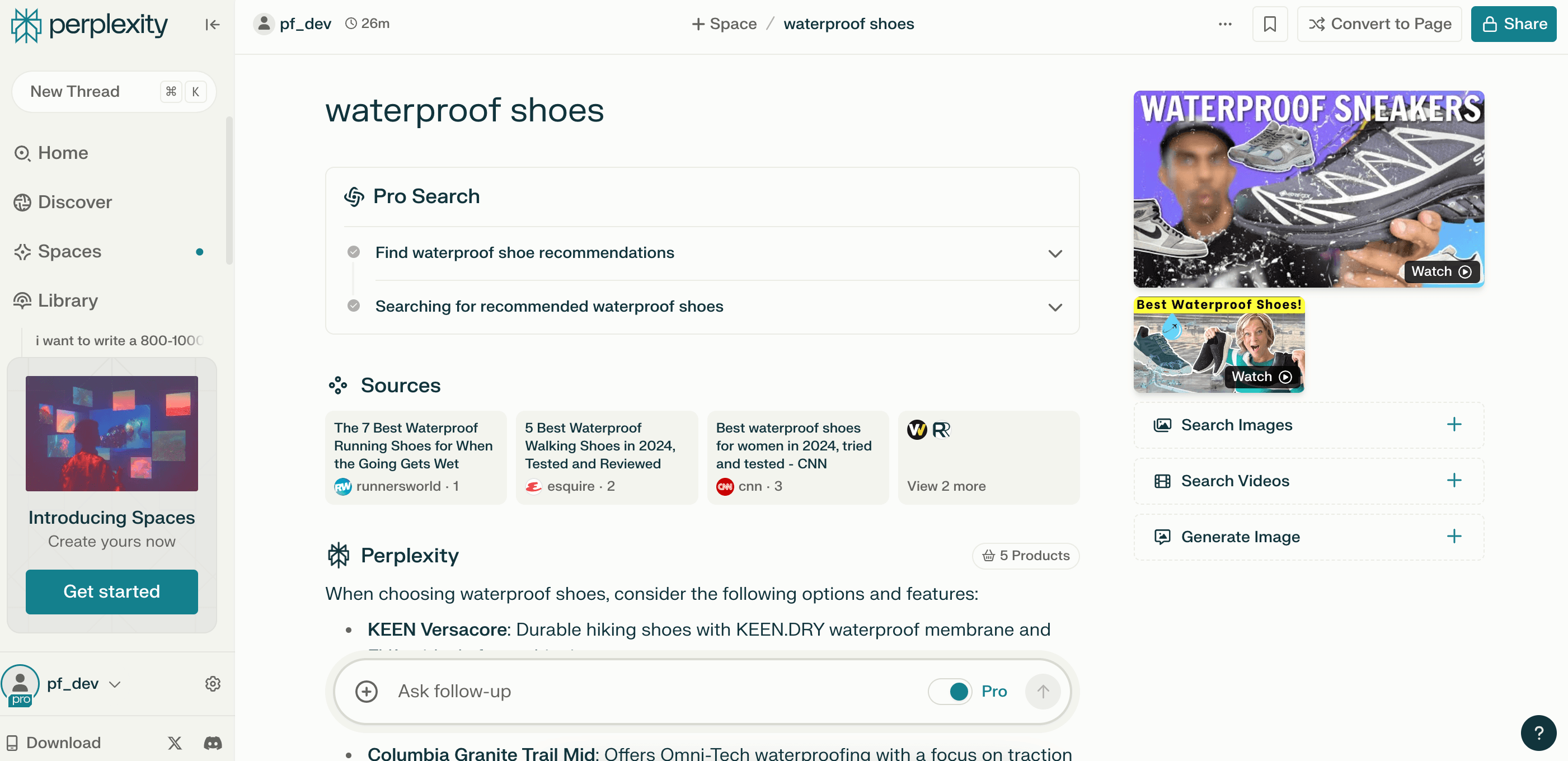

Let’s look at a real example. Say I want to buy “waterproof shoes”. When I Google it, I see different products with images, ratings, reviews, and the ability to filter my search by color, style, gender, etc. Great. When I ChatGPT it, I just get a list of products with images. When I Perplexity it, I get something in the middle: a list of product recommendations, along with links to the top products. Googling is still the best option out there.

Googling “waterproof shoes”

ChatGPT-ing “waterproof shoes”

Perplexity-ing “waterproof shoes”

The Evolution of Search Experiences

Product Snippets & Recommendations

The previous example is not to say that these alternative search engines will always be worse, just that over time I’d expect them to build their own versions of Google Shopping that display results in similar or enhanced ways.

To do this well, the engines need to understand the nitty-gritty of your product page: the price, the SKU, the features, the reviews, and so on. Google is in a great spot right now because it already reads the schema markup on your pages, so it can understand and index your product pages easily when that schema is present. And because many brands have also integrated with Google Shopping, Google can use that data to show featured products.
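
To make “reading your page schema” concrete, here is a minimal sketch of how any crawler could pull product facts out of a page’s JSON-LD `<script type="application/ld+json">` block using only Python’s standard library. It is not how Google or any other engine actually does this; the sample page, product name, and SKU are hypothetical.

```python
import json
from html.parser import HTMLParser


class JsonLdExtractor(HTMLParser):
    """Collect every JSON-LD block found in an HTML document."""

    def __init__(self):
        super().__init__()
        self._in_jsonld = False
        self._buffer: list[str] = []
        self.blocks: list = []

    def handle_starttag(self, tag, attrs):
        if tag == "script" and dict(attrs).get("type") == "application/ld+json":
            self._in_jsonld = True
            self._buffer = []

    def handle_data(self, data):
        if self._in_jsonld:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == "script" and self._in_jsonld:
            self.blocks.append(json.loads("".join(self._buffer)))
            self._in_jsonld = False


def product_facts(html: str):
    """Return name, SKU, and price from the first Product schema, or None if absent."""
    parser = JsonLdExtractor()
    parser.feed(html)
    parser.close()
    for block in parser.blocks:
        if isinstance(block, dict) and block.get("@type") == "Product":
            offer = block.get("offers", {})
            return {"name": block.get("name"), "sku": block.get("sku"),
                    "price": offer.get("price"), "currency": offer.get("priceCurrency")}
    return None


# Hypothetical product page with schema markup in the <head>.
PAGE = """<html><head>
<script type="application/ld+json">
{"@context": "https://schema.org", "@type": "Product", "name": "Trail Runner WP",
 "sku": "TR-WP-01", "offers": {"@type": "Offer", "price": "89.00", "priceCurrency": "USD"}}
</script></head><body>...</body></html>"""

print(product_facts(PAGE))
```

Without the schema block, the same page yields `None`, and an engine would have to infer the price and SKU from free text instead.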

Other search engines are definitely working on that (disclaimer: this is an assumption, but I’m pretty confident in it 😀). Ultimately, every search engine wants to capture purchase decisions, because that’s what brands care about, and that’s how engines make money.

Conversational Queries

Another big change is the shift towards conversational queries. These alternative search experiences, and Google’s AI Overviews, let users converse with the search engine instead of searching by keywords. Take the example below: I tell Perplexity that I’m going to a city where it’s raining and ask for shoe recommendations. The engine figures out on its own that I need waterproof shoes, and shows me results for them.

This is huge. To answer a query like this, the engine has to detect your intent, come up with keywords, search for those keywords in its index, find relevant pages, and then summarize the response. Here, it detected that I needed waterproof shoes without me ever using that phrase.
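
The steps above can be sketched as a toy pipeline. Real engines use language models for intent detection; this sketch swaps in a hand-written rule table (`INTENT_RULES`) and a made-up three-page index, both hypothetical, and it skips the final summarization step. The point it illustrates is that “waterproof” ends up in the search keywords even though the user never typed it.

```python
import re

# Hypothetical intent rules: a word in the user's message -> an implied product attribute.
INTENT_RULES = {"rain": "waterproof", "raining": "waterproof", "rainy": "waterproof",
                "snow": "insulated", "snowing": "insulated"}
PRODUCT_WORDS = {"shoes", "boots", "jacket"}

# A tiny, made-up index: page URL -> keywords the page covers.
INDEX = {
    "example.com/waterproof-shoes": {"waterproof", "shoes"},
    "example.com/insulated-boots": {"insulated", "boots"},
    "example.com/running-shoes": {"running", "shoes"},
}


def extract_keywords(message: str) -> set[str]:
    """Detect intent and turn it into search keywords."""
    words = set(re.findall(r"[a-z]+", message.lower()))
    attributes = {INTENT_RULES[w] for w in words if w in INTENT_RULES}
    products = words & PRODUCT_WORDS
    return attributes | products


def search(keywords: set[str]) -> list[str]:
    """Rank indexed pages by how many keywords they cover, dropping non-matches."""
    scored = [(len(page_kw & keywords), url) for url, page_kw in INDEX.items()]
    scored.sort(key=lambda item: (-item[0], item[1]))
    return [url for score, url in scored if score > 0]


message = "I'm visiting Seattle next week and it's raining. Which shoes should I pack?"
keywords = extract_keywords(message)
print(sorted(keywords))  # ['shoes', 'waterproof']
print(search(keywords))  # ['example.com/waterproof-shoes', 'example.com/running-shoes']
```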

Perplexity: Conversational query that maps to “waterproof shoes”

Multimodal Search

Another trend we’re seeing is multimodal search: traditionally we search by text, but now you can search by image, voice, video, etc. This is especially relevant for E-Commerce companies, but if your end users are changing the way they search, you need to account for that and be where they are.

So... what really happens when you search by uploading an image? Is it really that different from searching by text?

I believe that “image search” is a misnomer: it isn’t feasible to compare an uploaded image, pixel by pixel, against every image Google has indexed. That would take too long and use too much compute, because each image has at least 200x200 = 40,000 pixels. Now multiply that by at least 1 billion, since there are at least that many images on the internet, and repeat it every time someone runs an image search. Not feasible.
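
Spelled out with the article’s own lower bounds (200x200 pixels per image, 1 billion images), the per-query cost of a naive pixel-by-pixel comparison is:

```latex
\underbrace{200 \times 200}_{\text{pixels per image}} = 4 \times 10^{4},
\qquad
4 \times 10^{4} \times \underbrace{10^{9}}_{\text{images}} = 4 \times 10^{13}\ \text{pixel comparisons per query}
```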

While there’s no official confirmation of how these search engines do this, here’s what I think really happens when you search by media: the search engine first classifies what you uploaded (e.g. black shoes, gray laces) and then simply searches by text. However, because an image was uploaded, it prioritizes image results. How? By inspecting image alt text first, and then product schemas.
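
A toy version of this hypothesis: assume some image classifier (not shown) has already turned the upload into text labels, then rank pages by alt-text matches first and use Product schema matches only as a tie-breaker. The pages, labels, and the word-overlap scoring are all hypothetical stand-ins; this illustrates the author’s guess, not a confirmed engine behavior.

```python
# Hypothetical pages: main-image alt text and the "name" from each page's Product schema.
PAGES = [
    {"url": "example.com/a", "alt": "Black leather boots with gray laces", "schema_name": "Black Boot"},
    {"url": "example.com/b", "alt": "Running shoe", "schema_name": "Black Running Shoe"},
    {"url": "example.com/c", "alt": "", "schema_name": "Black Shoe Gray Laces"},
]


def matches(labels: set[str], text: str) -> int:
    """Count how many image labels appear as words in a piece of text."""
    return len(labels & set(text.lower().split()))


def search_by_image_labels(labels: set[str]) -> list[str]:
    """Rank pages by alt-text matches first, breaking ties with Product schema matches."""
    ranked = sorted(
        PAGES,
        key=lambda p: (matches(labels, p["alt"]), matches(labels, p["schema_name"])),
        reverse=True,
    )
    return [p["url"] for p in ranked]


# Pretend a classifier labeled the uploaded photo "black shoe, gray laces".
labels = {"black", "shoe", "gray", "laces"}
print(search_by_image_labels(labels))  # ['example.com/a', 'example.com/b', 'example.com/c']
```

In this sketch, page `c` has the best-matching schema but no alt text, so it ranks last; under the hypothesis above, missing alt text costs more than an incomplete schema.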

Why do these Search Engines come up with Different Answers?

Let’s go back to the waterproof shoes example. Google’s top results came from REI, Shoe Mill, Amazon, and Vessi. ChatGPT’s came from Real Simple, Travel + Leisure, and People. Perplexity’s came from Runner’s World, Esquire, and CNN.

ChatGPT citations for “waterproof shoes”

Perplexity citations for “waterproof shoes”

Huh. Three different search engines. Three pretty different results. Yes, there is overlap in some sources, but this shows that different engines consider a different set of websites most relevant to the search query!

Now, this is somewhat expected. Each search engine believes it provides the most accurate answer, and if all of them prioritized websites in exactly the same way, their answers, and therefore their products as a whole, would be undifferentiated.

So, why does this happen? And how can you optimize your website for different search engines? Or even a particular search engine?

The answer lies in how each engine understands the content of a webpage. Your web page itself is fixed: every engine has access to the same HTML, text, images, schemas, etc.

The difference lies in embedding models. At a very high level, embeddings are vector (numerical) representations of text. Since different engines use different embedding models, they interpret the same piece of text differently. When you search for something, the engine first embeds your query, then compares that embedding to the embeddings of pages in its index, and returns a list of the most relevant websites. Of course, several other factors also go into this: you can take a look at my previous piece, “How Search Works”, to learn more. But the first point of divergence between engines is the embedding model.
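
To see how two embedding models can rank the same pages differently, here is a minimal sketch with two deliberately tiny, hand-built “models”: model A counts exact words, while model B first maps words to broader concepts through a made-up synonym table. Both models, the vocabularies, and the two pages are hypothetical; real engines use learned dense embeddings. Ranking uses cosine similarity, a common (assumed here) way to compare embeddings.

```python
import math
import re

# Toy model A: counts exact words from a fixed vocabulary.
VOCAB_A = ["waterproof", "shoes", "rain", "boots"]

# Toy model B: maps words to broader concepts first (made-up synonym table).
CONCEPTS_B = {"waterproof": "wet", "rain": "wet", "rainy": "wet",
              "shoes": "footwear", "boots": "footwear"}
VOCAB_B = ["wet", "footwear"]


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z]+", text.lower())


def embed_a(text: str) -> list[int]:
    tokens = tokenize(text)
    return [tokens.count(word) for word in VOCAB_A]


def embed_b(text: str) -> list[int]:
    concepts = [CONCEPTS_B[t] for t in tokenize(text) if t in CONCEPTS_B]
    return [concepts.count(c) for c in VOCAB_B]


def cosine(u: list[int], v: list[int]) -> float:
    dot = sum(x * y for x, y in zip(u, v))
    norm = math.sqrt(sum(x * x for x in u)) * math.sqrt(sum(y * y for y in v))
    return dot / norm if norm else 0.0  # an all-zero vector is treated as unrelated


def rank(query: str, pages: dict[str, str], embed) -> list[str]:
    """Embed the query and every page, then sort pages by cosine similarity."""
    q = embed(query)
    scores = {name: cosine(q, embed(text)) for name, text in pages.items()}
    return sorted(scores, key=scores.get, reverse=True)


PAGES = {
    "retailer": "Waterproof shoes, trail shoes, running shoes",
    "magazine": "Best rain boots for a rainy trip",
}

print(rank("waterproof shoes", PAGES, embed_a))  # ['retailer', 'magazine']
print(rank("waterproof shoes", PAGES, embed_b))  # ['magazine', 'retailer']
```

Same query, same page text, opposite rankings: model A sees no shared words between the query and the magazine page, while model B treats “rain boots” as close in meaning to “waterproof shoes”.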

How to Evolve Your SEO Strategy?

Let’s be honest, you probably skipped everything I just said because you ultimately care about the “So, What?”. I don’t blame you 😀

The one disclaimer I do want to make before you take me to court: the points below are hypotheses that we’ve tested thoroughly and seen good results with for our own clients. Each website is different, and whoever tells you there is a single “playbook” is probably lying or overselling. Further, although SEO has been around for decades, I really do believe this is its second reckoning. Search has changed dramatically over the last 2 years and will keep changing, and our goal at Passionfruit is to decipher and simplify this into actionable insights that drive results.

While there are several things you must do to future-proof your SEO strategy, these are some of the most important ones.

First, evolve your keyword strategy. Instead of targeting a select range of short-tail keywords (1-2 words), we’ve seen that targeting more long-tail keywords (3-5 words) has more impact on both top-of-funnel AND bottom-of-funnel metrics. There are a few reasons why this works. Firstly, when a user searches for a longer term, they usually know exactly what they want, or are at least trying to find a niche, which correlates with higher purchase intent. Secondly, long-tail keywords align more closely with conversational queries, which are becoming more popular. Thirdly, long-tail keywords are generally searched less often, so they tend to be less competitive and easier to rank for.
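
As a starting point for auditing an existing keyword list, here is a small sketch that splits keywords into the short-tail and long-tail bands described above by word count. Treating phrases longer than 5 words as long-tail is our assumption; the article only defines the 1-2 and 3-5 word bands. The sample keywords are hypothetical.

```python
def keyword_type(keyword: str) -> str:
    """Classify a keyword as short-tail (1-2 words) or long-tail (3+ words)."""
    word_count = len(keyword.split())
    if word_count == 0:
        raise ValueError("keyword is empty")
    # The article's bands are 1-2 vs 3-5 words; counting 6+ words as long-tail is an assumption.
    return "short-tail" if word_count <= 2 else "long-tail"


def group_keywords(keywords: list[str]) -> dict[str, list[str]]:
    groups: dict[str, list[str]] = {"short-tail": [], "long-tail": []}
    for kw in keywords:
        groups[keyword_type(kw)].append(kw)
    return groups


candidates = ["shoes", "waterproof shoes", "waterproof shoes for women",
              "best waterproof walking shoes for city trips"]
print(group_keywords(candidates))
```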

Second, make sure your website is technically sound. As web crawlers become more complex, it’s reasonable to assume they will need to spend more time processing each page. At the same time, because they’re still limited by crawl budget, they can only allocate a certain amount of time to your entire website. So if your website is technically suboptimal, crawlers may not get through all of it, which leads to fewer indexed pages, and so on.
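
A back-of-the-envelope simulation of the crawl-budget argument, assuming a crawler that visits pages in order and stops once its time budget is used up. The 60-second budget and per-page fetch times are hypothetical numbers chosen for illustration; real crawl budgets are not published in this form.

```python
def crawl(pages: list[tuple[str, float]], budget_seconds: float) -> list[str]:
    """Visit pages in order until the next fetch would exceed the crawl budget."""
    indexed: list[str] = []
    spent = 0.0
    for url, fetch_seconds in pages:
        if spent + fetch_seconds > budget_seconds:
            break  # budget exhausted: remaining pages are not crawled this round
        spent += fetch_seconds
        indexed.append(url)
    return indexed


# Two hypothetical 100-page sites that differ only in how long each page takes to fetch.
fast_site = [(f"/product/{i}", 0.5) for i in range(100)]
slow_site = [(f"/product/{i}", 2.0) for i in range(100)]

print(len(crawl(fast_site, budget_seconds=60)))  # 100
print(len(crawl(slow_site, budget_seconds=60)))  # 30
```

With the same budget, the slow site gets fewer than a third of its pages crawled in this model.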

Third, and this is arguably part of technical SEO, make sure your important pages have complete and correct schemas. This is even more important for E-Commerce brands, particularly on collection and product pages. Collection and product schemas let search engines easily understand your product information and show it in Featured Products and Product Snippets. Google reiterated the importance of correct schemas just two weeks ago with the new version of Google Shopping.
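
Here is a minimal sketch of generating a schema.org `Product` object as JSON-LD for a product page, plus a simple completeness check. The types and properties used (`Product`, `Brand`, `Offer`, `AggregateRating`, `sku`, `priceCurrency`, etc.) are real schema.org vocabulary, but every product value is hypothetical, and the `CHECKLIST` is our own illustrative list, not Google’s official set of required fields.

```python
import json


def product_jsonld(name, sku, image_url, brand, price, currency, rating, review_count):
    """Build a schema.org Product object (all argument values in this example are hypothetical)."""
    return {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "sku": sku,
        "image": image_url,
        "brand": {"@type": "Brand", "name": brand},
        "offers": {
            "@type": "Offer",
            "price": price,
            "priceCurrency": currency,
            "availability": "https://schema.org/InStock",  # hard-coded here for simplicity
        },
        "aggregateRating": {
            "@type": "AggregateRating",
            "ratingValue": rating,
            "reviewCount": review_count,
        },
    }


# Illustrative checklist only; consult the search engine's own docs for what it requires.
CHECKLIST = ["name", "sku", "image", "brand", "offers", "aggregateRating"]


def missing_fields(product: dict) -> list[str]:
    return [field for field in CHECKLIST if not product.get(field)]


product = product_jsonld("Trail Runner WP", "TR-WP-01",
                         "https://example.com/images/trail-runner-wp.jpg",
                         "ExampleBrand", "89.00", "USD", "4.6", "212")
snippet = ('<script type="application/ld+json">\n'
           + json.dumps(product, indent=2) + "\n</script>")
print(snippet)
print(missing_fields({"@type": "Product", "name": "Trail Runner WP"}))
```

The second call flags a bare-bones schema that only has a name, which is the kind of incomplete markup worth fixing on collection and product pages.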

Fourth, know where your users are coming from. This will help you target different search engines individually, or double down on the important ones. If you can, integrate with different search engines’ embedding models to understand how each engine interprets and ranks your web pages. That’s what we’ve done at Passionfruit, and we’ve taken it a step further by building a custom model that scores every webpage for each engine and suggests a best “overall” score.
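
One simple way to combine these two ideas is a sketch, not Passionfruit’s model: attribute visits to engines by referrer hostname, then weight each engine’s relevance score for a page by the traffic it actually sends. The hostname mapping in `ENGINE_DOMAINS` is an assumption to verify against your own analytics, and the per-engine scores are hypothetical placeholders for whatever scoring you use.

```python
from collections import Counter
from urllib.parse import urlparse

# Assumed referrer hostnames per engine; verify against your own analytics data.
ENGINE_DOMAINS = {
    "google": ("google.com",),
    "bing": ("bing.com",),
    "perplexity": ("perplexity.ai",),
    "chatgpt": ("chatgpt.com", "chat.openai.com"),
}


def engine_for(referrer: str) -> str:
    """Map a referrer URL to an engine name, or 'other' if it matches none."""
    host = urlparse(referrer).hostname or ""
    for engine, domains in ENGINE_DOMAINS.items():
        if any(host == d or host.endswith("." + d) for d in domains):
            return engine
    return "other"


def overall_score(engine_scores: dict[str, float], visits: Counter) -> float:
    """Average per-engine page scores, weighted by how many visits each engine sends."""
    weights = {e: n for e, n in visits.items() if e in engine_scores}
    total = sum(weights.values())
    if total == 0:
        return 0.0
    return sum(engine_scores[e] * n for e, n in weights.items()) / total


referrers = [
    "https://www.google.com/",
    "https://www.google.com/search?q=waterproof+shoes",
    "https://www.perplexity.ai/search/abc",
    "https://chatgpt.com/",
    "https://news.example.com/story",
]
visits = Counter(engine_for(r) for r in referrers)
# Hypothetical 0-1 relevance scores for one page, one per engine.
scores = {"google": 0.8, "bing": 0.7, "perplexity": 0.5, "chatgpt": 0.6}
print(visits)
print(round(overall_score(scores, visits), 3))  # 0.675
```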

Fifth, every website is different, and what works for one may not work for another. Identify which actions are working well for you across the different stages of the funnel, and double down on those. Generic SEO templates won’t help anymore.

And lastly, make sure your content demonstrates real experience and expertise and adds unique value. Adding something unique is called information gain, and search engine algorithms tend to reward it. Experience and expertise help with user engagement. With the proliferation of AI writing tools, anyone can publish hundreds of content pieces a month. However, we’ve noticed that purely AI-generated content may work for a week or two but soon starts getting demoted by engines. Worse, it can get your website flagged, which leads to broader losses. This is not because engines can reliably “detect AI”; it’s because users don’t engage with purely AI-generated content, since it almost never adds real value for them. AI is a great tool, but a “human in the loop” process is the way to go.
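
A crude way to build intuition for information gain: measure what share of a page’s distinct words do not appear in the pages already ranking for the query. This is a deliberately simplified proxy of our own, not how any search engine computes information gain, and the example texts are hypothetical.

```python
import re


def terms(text: str) -> set[str]:
    return set(re.findall(r"[a-z]+", text.lower()))


def novelty(page: str, competitors: list[str]) -> float:
    """Share of a page's distinct words that none of the competing pages use."""
    page_terms = terms(page)
    if not page_terms:
        return 0.0
    seen = set().union(*(terms(c) for c in competitors))
    return len(page_terms - seen) / len(page_terms)


competitors = ["Waterproof shoes keep your feet dry", "The best waterproof shoes for rain"]
rehash = "Waterproof shoes keep your feet dry"
first_hand = "We soaked each waterproof shoe for ten minutes"
print(novelty(rehash, competitors))      # 0.0
print(novelty(first_hand, competitors))  # 0.75
```

A page that restates what already ranks scores zero, while a page describing first-hand testing introduces new material; a real system would weigh meaning rather than raw words.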

Conclusion

This piece ended up longer than I expected, and there are pages more I could write! This is one of the most exciting times for search: everything, everywhere is evolving, and it’s a great opportunity for growing companies to build a strong, long-term organic presence. We’re committed to keeping up to date with all things Search, and I’d love to hear your thoughts, feedback, and questions! Feel free to email me at rishabh@getpassionfruit.com to learn more!